In the fall of 2021, whistleblower Frances Haugen shed light on Facebook’s internal documents, revealing concerning evidence that the company was aware of the potential negative impact of its platforms on young users but took insufficient action to address it.

The revelation caught the attention of parents, and the stakes are high: roughly 21 million American adolescents are active on social media.

As a result, families have initiated legal action against social media platforms. Since our initial report in December, the number of families pursuing lawsuits has grown to over 2,000.

More than 350 lawsuits are expected to move forward this year against TikTok, Snapchat, YouTube, Roblox, and Meta, the parent company of Instagram and Facebook.

In this report, you will hear from some of the families involved in these lawsuits. We want to caution you that some of what is discussed is distressing, but we believe it is important to include because parents say these posts damaged their children’s mental health and, tragically, contributed to the deaths of some young people.

Kathleen Spence: They are holding our children hostage and actively preying on them.

Sharyn Alfonsi: Preying on them?

Kathleen Spence: Yes.

The Spence family has filed a lawsuit against Meta, the parent company of Instagram. Kathleen and Jeff Spence claim that Instagram played a significant role in their daughter Alexis’ struggle with depression and the development of an eating disorder when she was just 12 years old.

Kathleen Spence: We gradually realized that we were losing our daughter. We had no understanding of how deeply social media had impacted her. She was being drawn into a hidden and dark world.

It started when Kathleen and Jeff, both middle school teachers on Long Island, New York, gave their 11-year-old daughter Alexis a cell phone so she could stay in touch after school.

Kathleen Spence: We had established strict rules regarding phone usage right from the beginning. The phone was never allowed in her room at night. We would keep it in the hallway.

Jeff Spence: We monitored the phone and set up restrictions.

Alexis Spence: I would wait for my parents to fall asleep, and then I would either sit in the hallway or sneak the phone into my room. I wasn’t allowed to use many apps, and my parents had set up parental controls.

Sharyn Alfonsi: How quickly did you find a way around those restrictions?

Alexis Spence: Pretty quickly.

Hoping to connect and keep up with friends, Alexis joined Instagram. Instagram’s policy requires users to be at least 13 years old. Alexis was 11.

Sharyn Alfonsi: I thought you had to be 13.

Alexis Spence: It asks you, “Are you 13 years or older?” I checked the box “yes” and then just kept going.

Sharyn Alfonsi: And there were never any checks?

Alexis Spence: No. No verification or anything like that.

Sharyn Alfonsi: If I had picked up your phone would I have seen the Instagram app on there?

Alexis Spence: No. There were apps that you could use to disguise it as another app. So, you could download like a calculator, ‘calculator’, but it’s really Instagram.

Jeff Spence: There was always some workaround.

Sharyn Alfonsi: She was outwitting you.

Jeff Spence: Right, she was outwitting us.

Kathleen Spence: She was addicted to social media. We couldn’t stop it. It was much bigger than us.

Now 20, Alexis says her innocent search for fitness routines on Instagram led her into a dark and harmful world.

Alexis Spence: It initially started with fitness-related content. However, the algorithm started showing me diets, which eventually shifted towards promoting eating disorders.

Sharyn Alfonsi: What kind of content were you seeing?

Alexis Spence: People would post pictures of themselves looking extremely sick or excessively thin, using those images to promote eating disorders.

These are a few examples of the images that Instagram’s algorithms pushed to Alexis. The algorithms analyzed her browsing history and personal data to serve her content she had never directly requested.

Sharyn Alfonsi: What did you learn from exploring these pro-anorexic websites?

Alexis Spence: I learned a lot. I discovered information about diet pills and how to lose weight at the age of 11, during a time when my body was supposed to naturally go through changes. It was challenging.

Sharyn Alfonsi: When did it go from something you were looking at to something you started doing to yourself?

Alexis Spence: It only took a few months.

Sharyn Alfonsi: Did it make it seem normal to you? Did you think, “Well, others are doing it”?

Alexis Spence: Absolutely. I felt like they needed help, and I needed help too. Instead of receiving the assistance I needed, I was getting guidance on how to continue down a harmful path.

By the age of 12, Alexis had developed an eating disorder as a result of her experiences on Instagram. Despite feeling depressed, she would spend five hours a day scrolling through the app.

In her diary, she drew a picture of herself surrounded by her phone and laptop and by thoughts of self-hatred and suicide.

Alexis Spence: I was struggling with my mental health, depression, and body image. Social media did not contribute positively to my confidence. If anything, it made me hate myself.

During her sophomore year, Alexis posted on Instagram that she didn’t deserve to exist. A friend alerted a school counselor, and her parents received a frightening call.

Kathleen Spence: That day was the scariest day of our lives. I received a call from the school, and they showed me Instagram posts where Alexis expressed her desire to harm herself and commit suicide.

If Instagram has the necessary software to protect its users, why was this not flagged? Why was it not identified?

A previously unpublished internal document shows that Facebook knew Instagram was exposing girls to harmful content.

It describes an internal test in which an Instagram employee created a fake account posing as a 13-year-old girl looking for diet tips.

The account was steered toward content and recommendations promoting dangerous eating-disorder behaviors.

Other memos indicate that Facebook employees raised concerns about company research showing that Instagram made one in three teen girls feel worse about their bodies, and that teens using the app experienced higher rates of anxiety and depression.

Sharyn Alfonsi: How did you feel when you first saw the Facebook papers?

Kathleen Spence: It was sickening. I was there, struggling and hoping to save my daughter’s life, while they had all these documents behind closed doors that could have protected her. They chose to ignore that research.

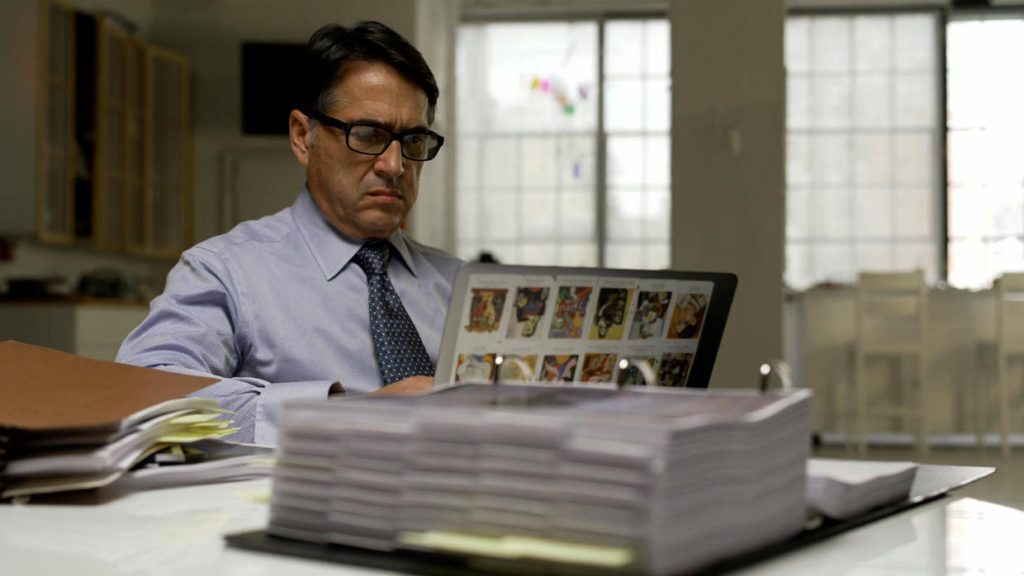

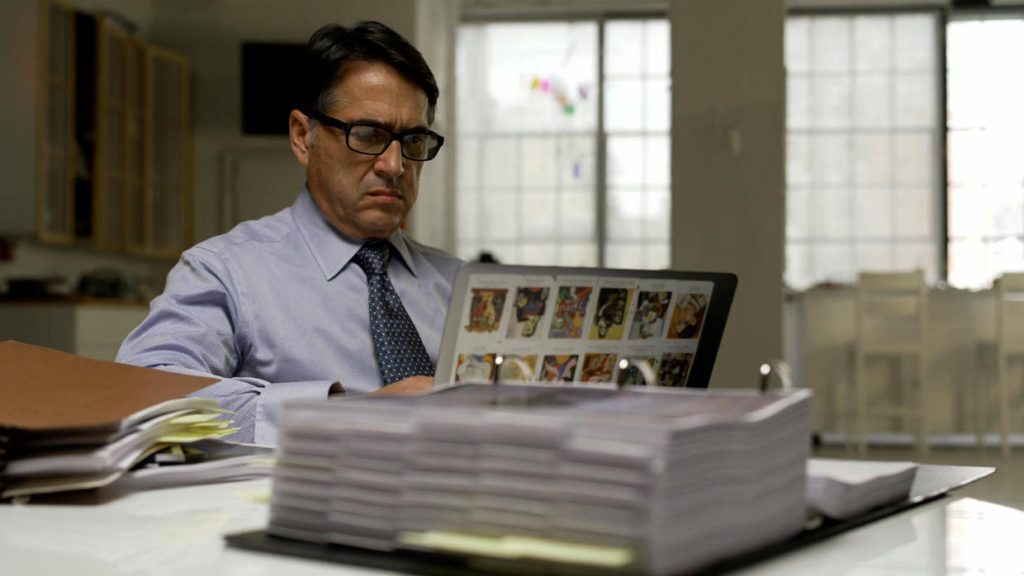

Attorney Matt Bergman represents the Spence family and founded the Social Media Victims Law Center after reading the Facebook papers. He is now working with over 1,800 families pursuing lawsuits against social media companies like Meta.

Matt Bergman: Time and time again, when given the choice between the safety of our kids and profits, they always choose profits.

Bergman and his team are preparing for the discovery process in the federal case against Meta and other social media companies. Although the lawsuit is expected to seek millions of dollars, its primary focus is changing company policy rather than winning financial compensation.

Bergman, a product liability attorney by background, argues that social media platforms are intentionally designed in ways that harm children.

Matt Bergman: These companies have deliberately designed a product that is addictive. They understand that the longer children stay online, the more money they make. It doesn’t matter how harmful the content is.

Sharyn Alfonsi: So the fact that kids ended up seeing disturbing content wasn’t accidental; it was intentional?

Matt Bergman: Absolutely. This is not a coincidence.

Sharyn Alfonsi: Isn’t it the parent’s responsibility to monitor these things?

Matt Bergman: Of course, parental responsibility is important. But these products are specifically designed to circumvent parental authority.

Sharyn Alfonsi: So what needs to be done?

Matt Bergman: Firstly, there should be age and identity verification. The technology exists to verify age and ensure that people are who they claim to be. Secondly, the algorithms should be turned off. There is no reason why someone like Alexis Spence, who was interested in fitness, should be directed towards anorexic content. Thirdly, there should be warnings so that parents are aware of what is happening. Realistically, social media platforms can never be 100% safe. However, these changes would make them safer.

The Roberts family also questions whether social media is safe for children. Their 14-year-old daughter, Englyn, had written online about her struggles with self-worth, relationships, and mental health. One night in August 2020, just hours after her parents said goodnight to her, Englyn took her own life. Her parents later found a disturbing video on her phone that they believe influenced her decision.

Toney Roberts: Finding your child hanging and being in disbelief is unimaginable. It feels like it can’t be true. And ultimately, I blame myself.

Sharyn Alfonsi: Why do you blame yourself?

Toney Roberts: Because I’m her dad. I’m supposed to know.

Toney Roberts found an Instagram post that had been sent to Englyn: a video of someone pretending to hang themselves. He believes that is where Englyn got the idea.

Toney Roberts: If that video hadn’t been sent to her, she wouldn’t have known how to do it in that particular way.


One family, the Spences, is suing Meta, claiming that Instagram played a role in their daughter Alexis’ descent into depression and the development of an eating disorder when she was only 12 years old.

Although her parents imposed strict rules on her phone use, such as keeping the phone out of her room at night and setting up parental controls, Alexis found ways around the restrictions.

Her innocent search for fitness routines on Instagram led her into a dark world, where she was exposed to content promoting eating disorders and unhealthy body images.

Alexis spent up to five hours a day scrolling through Instagram, despite the negative impact it had on her mental health.

She created multiple Instagram accounts and, by the age of 12, had developed an eating disorder.

The algorithms on the platform, which processed her browsing history and personal data, pushed content to her that she had never directly requested.

These algorithms directed her towards pro-anorexic content and harmful advice on weight loss.

The situation escalated when Alexis posted on Instagram that she didn’t deserve to exist, prompting a friend to alert a school counselor.

Alexis’ parents were devastated when they were shown Instagram posts expressing her desire to harm herself.

They questioned why Instagram’s software, which is supposed to protect users, did not flag or identify such concerning content.

Internal documents, previously undisclosed, revealed that Facebook was aware that Instagram was exposing girls to dangerous content, including content related to eating disorders.

Matt Bergman, the attorney representing the Spence family, believes that social media platforms intentionally design their products to be addictive.

He argues that the platforms prioritize profits over the safety of children, even when they are aware of the harmful nature of the content.

While parental responsibility is important, Bergman contends that these platforms are designed to evade parental authority.

He suggests implementing age and identity verification, disabling harmful algorithms, and providing clear warnings to parents to make social media platforms safer.

Another family, the Robertses, is also taking legal action against Meta after their daughter Englyn took her own life.

They discovered an Instagram post depicting someone pretending to hang themselves, which they believe influenced Englyn’s decision.

Despite Meta’s claims of prioritizing teen safety and prohibiting self-harm content, the video remained on the platform for over a year before being taken down.

The Roberts family questions who holds these social media companies accountable for failing to adhere to their own policies.


The surgeon general has recently issued a warning about the potential harm to the mental health of youth caused by social media and has called for stricter standards and actions from both the government and tech companies. This growing concern may prompt changes within the industry to better protect young users.

Statement from Meta

“We want teens to be safe online. We’ve developed more than 30 tools to support teens and families, including supervision tools that let parents limit the amount of time their teens spend on Instagram, and age verification technology that helps teens have age-appropriate experiences. We automatically set teens’ accounts to private when they join Instagram, and we send notifications encouraging them to take regular breaks. We don’t allow content that promotes suicide, self-harm, or eating disorders, and of the content we remove or take action on, we identify over 99% of it before it’s reported to us. We’ll continue to work closely with experts, policymakers, and parents on these important issues.” – Antigone Davis, Vice President, Global Head of Safety, Meta

Statement from Snapchat Global Head of Platform Safety Jacqueline Beauchere

“The loss of a family member is devastating, and our hearts go out to people facing these tragedies, no matter the circumstances. We designed Snapchat to be different from traditional social media, built around visual messaging between real friends and avoiding the most toxic features that encourage social comparison and can take a toll on mental health. We know that friendships are a critical source of support for young people, especially when dealing with mental health challenges, and we continue to work with leading experts on in-app tools and resources to support our community – especially those who may be struggling.”

TikTok only provided background information and declined to provide a statement in response to our story.

Produced by Ashley Velie. Associate producers, Jennifer Dozor and Elizabeth Germino. Edited by April Wilson.