San Francisco-based prime broker FalconX plans to use OpenAI’s technology to develop a chatbot called Satoshi for its clients. Rather than merging artificial intelligence (AI) with cryptocurrencies, FalconX aims to use LLM-based technology to help investors. FalconX CEO Raghu Yarlagadda says Satoshi can answer client queries such as “What are the three biggest differences between two blockchain platforms?” or “What is the delta between Sharpe ratios for a Bitcoin basis strategy or a Bitcoin hold strategy over two weeks?”. The bot can generate investment ideas based on a client’s historical trading activity, portfolios, and interests.
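To make the Sharpe-ratio query concrete, here is a minimal sketch of the arithmetic behind a "delta between Sharpe ratios." It is not how Satoshi computes anything, since FalconX has not published that. The function names, the zero risk-free rate, and the sample return figures are all illustrative assumptions.

```python
import statistics

def sharpe_ratio(returns, risk_free_per_period=0.0):
    """Per-period (unannualized) Sharpe ratio: mean excess return / sample std dev."""
    excess = [r - risk_free_per_period for r in returns]
    spread = statistics.stdev(excess)  # sample standard deviation; needs >= 2 points
    if spread == 0:
        raise ValueError("returns have zero variance; Sharpe ratio is undefined")
    return statistics.mean(excess) / spread

def sharpe_delta(returns_a, returns_b, risk_free_per_period=0.0):
    """Difference between the Sharpe ratios of two strategies over the same window."""
    return (sharpe_ratio(returns_a, risk_free_per_period)
            - sharpe_ratio(returns_b, risk_free_per_period))

if __name__ == "__main__":
    # Hypothetical daily returns, invented purely for illustration (not market data).
    basis_strategy = [0.002, 0.001, 0.003, 0.002, 0.001, 0.002, 0.003]
    hold_strategy = [0.020, -0.030, 0.015, -0.010, 0.025, -0.020, 0.010]
    print(f"Sharpe delta: {sharpe_delta(basis_strategy, hold_strategy):.2f}")
```

A two-week window yields very few data points, so any such figure would be noisy at best.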

While the technology is still in its early stages, it is expected to advance quickly. FalconX is a natural bridge for bringing OpenAI’s technology into crypto, thanks in part to the company’s engineering head, Prathab Murugesan, who spent 2.5 years at Google working to build machine-learning technologies into products such as Gmail and Google Docs. Yarlagadda, who began work at Google in 2014 on current CEO Sundar Pichai’s Chrome OS team, said the idea that Google would become a machine-learning company was a complete and radical departure from the norm.

Machine-learning algorithms cannot tell traders what to do next, but LLMs, such as those used by OpenAI and Google, build generative AI on top of machine learning, making it possible for these platforms to take in reams of unfiltered and imprecise data and respond to any query. Yarlagadda says the company had been working on Satoshi for over nine months, pre-dating the ChatGPT hype, but it ran into roadblocks until OpenAI cleared the way. FalconX uses OpenAI’s API stacks and infrastructure to test and build Satoshi and frequently interacts with the firm’s account management and engineering teams. The company aims to make ChatGPT’s LLM a base layer for a wide range of uses and says it will integrate LLMs beyond those offered by OpenAI, such as Google’s Bard.

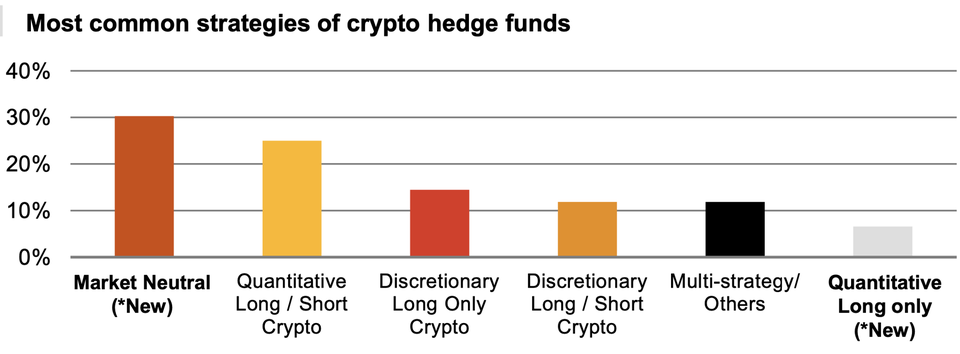

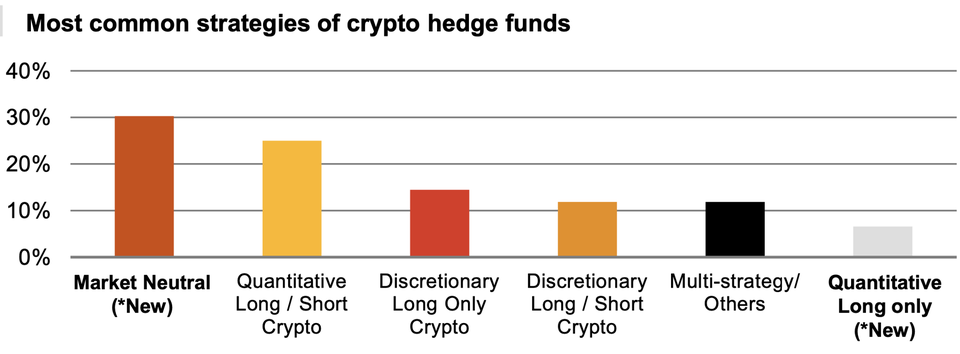

Satoshi is an AI-powered platform designed to help hedge funds, venture capital funds, family offices, brokers, and retail traders compete on an even playing field with big quantitative operations. While most hedge funds may use a quantitative approach, 45% of them still use a discretionary approach to at least some of their trading, and other categories of traders rely heavily on discretion. Satoshi aims to cater to these groups of traders by providing them with relevant information and tools to make informed trading decisions.

The platform aims to achieve this in three ways. First, it can survey relevant news and information across traditional and social media to provide briefings tailored to a client’s interests or holdings. For example, it can answer questions like “How did my portfolio perform over the past 24 hours?” or “Who are the three biggest social media influencers posting about a certain asset, and what are they saying?”.

Second, users can test trading strategies by asking the platform questions like, “How much will it cost me to put on a $1,000 short position on Bitcoin?” or “What is the best strategy for purchasing $5 million of ether without paying more than 25bps?”. Finally, Satoshi will eventually have buy/sell buttons built into the platform so that the user can immediately execute those strategies.
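For readers unfamiliar with basis points, the 25bps constraint in the ether example reduces to simple arithmetic: one basis point is 0.01%, so 25bps on $5 million is a cost ceiling of $12,500. The sketch below illustrates that calculation only. The function names and the sample fill prices are hypothetical and do not reflect Satoshi's actual logic or any FalconX API.

```python
BPS_PER_UNIT = 10_000  # 1 basis point = 1/10,000 of the notional (0.01%)

def max_cost_usd(notional_usd, limit_bps):
    """Largest execution cost, in dollars, that stays within a basis-point limit."""
    return notional_usd * limit_bps / BPS_PER_UNIT

def slippage_bps(avg_fill_price, reference_price):
    """Cost of a buy order in basis points, relative to a reference price."""
    return (avg_fill_price - reference_price) / reference_price * BPS_PER_UNIT

def within_limit(avg_fill_price, reference_price, limit_bps):
    """True if the average fill price keeps a buy order under the bps limit."""
    return slippage_bps(avg_fill_price, reference_price) <= limit_bps

if __name__ == "__main__":
    # The $5 million / 25bps figures come from the example query above.
    print(max_cost_usd(5_000_000, 25))  # 12500.0
    # Hypothetical prices for illustration: reference 2,000.00, average fill 2,003.00.
    print(within_limit(2003.0, 2000.0, 25))  # True (about 15bps)
```

In practice the reference price (mid-market, arrival price, or a benchmark such as VWAP) changes the answer, and fees may or may not count toward the limit; the query as quoted does not specify either.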

However, much of what Satoshi aims to achieve is still aspirational. The platform remains untested and is not yet integrated with necessary data sources such as exchange order books. It also cannot produce trading charts and other tools that professional users consider essential.

A potentially explosive blind spot for the platform would likely be its inability to assess leverage levels or financial solvency for trading counterparties in crypto. One key lesson from the collapse of major crypto players such as BlockFi, Three Arrows Capital, Genesis Global Trading, and FTX is that many of them were indebted to each other and took on massive amounts of leverage to maximize gains in what was thought to be a forthcoming crypto supercycle.

Furthermore, there are unresolved problems with generative AI, including privacy issues. The technology can also produce hallucinations, or at least their virtual equivalent: wrong answers delivered in a matter-of-fact and convincing fashion. That is hazardous when the wrong answer is a trading strategy.

In one infamous example, ChatGPT falsely claimed that a George Washington University law professor had been accused of sexual harassment, even concocting a Washington Post story to support the claim. The ramifications could be even more serious for users who trade large amounts of money based on hallucinations provided by Satoshi.
